Understanding the Need for Micro-Segmentation

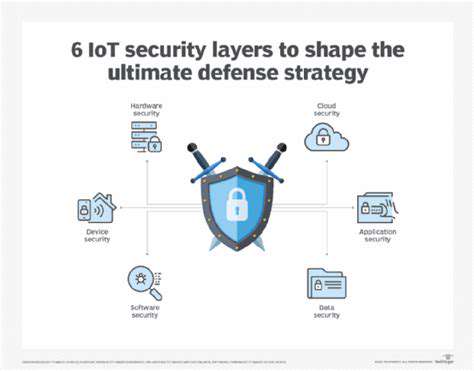

Modern network security strategies increasingly incorporate micro-segmentation as a fundamental control mechanism. This technique goes beyond traditional VLAN segmentation by establishing security boundaries around individual workloads. In practical terms, this means that even if attackers compromise one application component, their ability to pivot to other systems becomes severely limited.

Consider a typical data center. Without segmentation, a vulnerability in a web server can give an attacker a network path to backend databases. With properly implemented controls, each component communicates only through strictly defined channels. This protects what security professionals call east-west traffic: the lateral traffic between workloads inside the network, as opposed to north-south traffic crossing the perimeter. The approach resembles compartmentalization in biological systems, where a threat stays contained near its point of entry instead of spreading freely.
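To make the web-server-to-database scenario concrete, the sketch below compares which workloads an attacker could reach from a compromised web server in a flat network and in a segmented one. The workload names and the allowed paths are hypothetical, and the model is deliberately simple: it treats every allowed path as something an attacker can follow after compromising the workload at its source.

```python
from collections import deque
from itertools import permutations


def reachable(start, allowed_edges):
    """Return the workloads reachable from `start` by chaining allowed paths."""
    graph = {}
    for src, dst in allowed_edges:
        graph.setdefault(src, set()).add(dst)

    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)

    seen.discard(start)
    return seen


# Hypothetical workloads, for illustration only.
WORKLOADS = ["web", "app", "db", "backup"]

# Flat network: every workload can talk to every other workload.
FLAT = list(permutations(WORKLOADS, 2))

# Segmented network: only the paths the application needs.
SEGMENTED = [("web", "app"), ("app", "db")]

print(sorted(reachable("web", FLAT)))       # ['app', 'backup', 'db']
print(sorted(reachable("web", SEGMENTED)))  # ['app', 'db']
```

In the segmented case the backup system drops out of reach entirely, and the database is reachable only by compromising the application tier first. In the flat case it is one hop away.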

The Principles of Micro-Segmentation

Effective implementation follows two core tenets. First, security teams must establish clear communication matrices defining which components need to interact. These policies typically follow the principle of least privilege, where each system receives only the minimum access it needs to function. Second, controls must be dynamic enough to accommodate legitimate traffic patterns while blocking anomalous behavior.
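A communication matrix with least privilege can be sketched as an explicit allow-list with a default-deny check. The tags (`web`, `app`, `db`) and ports below are assumptions chosen for illustration, not taken from any particular product.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    source: str       # workload tag of the initiator, e.g. "web"
    destination: str  # workload tag of the target
    port: int         # destination port


# Hypothetical communication matrix: only these flows are permitted.
ALLOWED_FLOWS = frozenset({
    Flow("web", "app", 8443),
    Flow("app", "db", 5432),
})


def is_allowed(flow, allowed=ALLOWED_FLOWS):
    """Default deny: a flow is permitted only if it is listed explicitly."""
    return flow in allowed


print(is_allowed(Flow("web", "app", 8443)))  # True
print(is_allowed(Flow("web", "db", 5432)))   # False
```

The second check fails because the web tier has no direct entry for the database, even though the port itself is open to the application tier. Anything not listed is denied, which is what least privilege amounts to at the network level.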

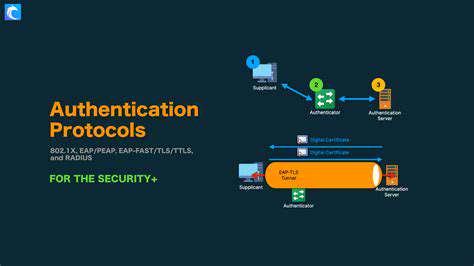

The technology stack supporting these measures typically includes next-generation firewalls with application awareness and intent-based networking systems. These tools evaluate more than network addresses: they also consider the application and protocol behind a connection attempt. When properly configured, they can help distinguish legitimate database queries from likely exploitation attempts and apply the appropriate security controls in real time.

Implementing Micro-Segmentation Strategies

Mature organizations deploy in phases. Security architects begin by creating a comprehensive application dependency map that identifies all necessary communication paths. They then work with development teams to establish security groups and tagging conventions that persist across deployment environments.

The most successful implementations combine automated policy generation with manual review. Tools analyze traffic patterns to suggest initial segmentation rules, which security teams then refine based on business requirements. Continuous monitoring keeps policies effective as applications evolve, and some tools apply machine learning to flag when rules may need adjusting.
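As a rough illustration of automated policy suggestion, the sketch below proposes an allow rule for any flow observed at least a minimum number of times. The `min_count` threshold and the `(source_tag, destination_tag, port)` tuple format are assumptions. Commercial tools use richer signals, and the output here is only a starting point for the manual review described above.

```python
from collections import Counter


def suggest_rules(observed_flows, min_count=5):
    """Suggest allow rules for flows seen at least `min_count` times.

    `observed_flows` is an iterable of (source_tag, destination_tag, port)
    tuples, one per observed connection.
    """
    counts = Counter(observed_flows)
    return sorted(flow for flow, seen in counts.items() if seen >= min_count)


# Hypothetical traffic sample: one frequent flow and one rare flow.
sample = [("web", "app", 8443)] * 6 + [("web", "db", 5432)]

print(suggest_rules(sample))  # [('web', 'app', 8443)]
```

The rare web-to-database connection is not suggested as a rule. A reviewer would still need to decide whether it is an infrequent but legitimate job or something that should stay blocked.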

Benefits of Micro-Segmentation in a Zero Trust Environment

When integrated with broader security initiatives, micro-segmentation delivers several operational advantages. Security teams gain finer-grained visibility into network traffic patterns, enabling faster detection of suspicious activity. Incident responders benefit from natural containment of threats, reducing investigation scope during security events.

From a compliance perspective, these controls help organizations demonstrate due care for sensitive data. Regulators increasingly expect evidence of proper data segregation, particularly in industries handling financial or healthcare information. Auditors may also accept properly implemented micro-segmentation as a compensating control when other security measures fall short of the ideal configuration.

Challenges and Considerations in Micro-Segmentation

While powerful, the approach isn't without implementation hurdles. Many organizations struggle with the initial discovery phase, particularly in complex environments with undocumented application dependencies. Performance considerations also arise when security controls introduce latency into previously unimpeded communication paths.

Maintenance presents another significant challenge. As applications update and business requirements change, segmentation rules require regular review. Organizations must establish clear ownership for policy maintenance, often dividing responsibilities between security architects and application owners. Without proper governance, rules can become outdated, either creating security gaps or unnecessarily restricting legitimate traffic.
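One way to support a periodic rule review is to compare the current rule set against recently observed traffic. The sketch below is a minimal version under assumed inputs: rules and flows share a hypothetical `(source_tag, destination_tag, port)` format, and the review window is whatever period `recent_flows` covers.

```python
def review_rules(rules, recent_flows):
    """Compare allow rules against recently observed flows.

    Returns rules that matched no recent traffic (candidates for removal)
    and observed flows that no rule covers (blocked traffic to investigate).
    """
    rule_set = set(rules)
    seen = set(recent_flows)
    return {
        "unused_rules": sorted(rule_set - seen),
        "unmatched_flows": sorted(seen - rule_set),
    }


# Hypothetical rule set and traffic sample.
rules = [("web", "app", 8443), ("app", "db", 5432), ("app", "cache", 6379)]
recent = [("web", "app", 8443), ("app", "db", 5432), ("app", "queue", 5672)]

report = review_rules(rules, recent)
print(report["unused_rules"])     # [('app', 'cache', 6379)]
print(report["unmatched_flows"])  # [('app', 'queue', 5672)]
```

The two lists map onto the two failure modes: an unused rule may be an outdated opening that creates a security gap, while an unmatched flow may be legitimate traffic that the policy now restricts. Neither list is a verdict, so each entry still needs an owner to confirm it.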

Beyond the Firewall: A Holistic Security Approach

Zero Trust: Shifting the Paradigm

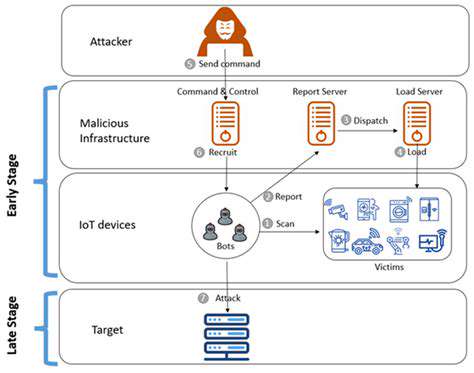

Zero Trust represents a fundamental departure from perimeter-based models. Where traditional security focused on keeping threats out, Zero Trust assumes breach and focuses on containment. This philosophical shift requires rethinking everything from network architecture to incident response playbooks.

The transition affects organizational structures as much as technical systems. Security teams now collaborate closely with identity management specialists, while network engineers work alongside application developers. This cross-functional approach ensures security considerations inform decisions at every layer of the technology stack.

Micro-Segmentation: Isolating Threats

Workload isolation plays a critical role in a comprehensive security strategy. Modern implementations combine network-based and host-based controls, creating defense in depth for critical assets. Security operations centers monitor these segmented environments using specialized tools that highlight unusual communication patterns.

The most advanced deployments incorporate runtime protection mechanisms that adapt to emerging threats. These systems analyze process behavior rather than relying solely on predefined rules, offering protection against previously unknown attack vectors. When combined with traditional segmentation, they create robust defenses that evolve with the threat landscape.

Enhancing Visibility and Control

Effective security in complex environments demands comprehensive monitoring capabilities. Modern security operations platforms aggregate data from diverse sources, applying correlation rules to identify potential incidents. These systems increasingly incorporate user and entity behavior analytics (UEBA) to detect subtle signs of compromise that traditional tools might miss.
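The idea behind behavior analytics can be illustrated with a simple baseline comparison: flag an entity when today's activity deviates sharply from its own history. The sketch below uses a z-score on daily connection counts. The threshold of 3.0 and the choice of metric are assumptions, and production UEBA systems model many more signals than this.

```python
from statistics import mean, pstdev


def is_anomalous(history, today, threshold=3.0):
    """Flag `today` if it lies more than `threshold` standard deviations
    from the mean of `history` (daily connection counts for one entity)."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    baseline = mean(history)
    spread = pstdev(history)
    if spread == 0:
        # A perfectly flat history: any change at all is a deviation.
        return today != baseline
    return abs(today - baseline) / spread > threshold


# Hypothetical daily connection counts for one service account.
history = [10, 12, 11, 9, 10, 11, 12]

print(is_anomalous(history, 11))  # False
print(is_anomalous(history, 40))  # True
```

A count of 40 against a baseline near 11 is flagged, while normal day-to-day variation is not. A flag like this is a prompt for investigation, not proof of compromise.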

Automation plays an increasingly important role in maintaining security postures. Routine tasks like log analysis and vulnerability scanning now benefit from machine learning algorithms that improve over time. However, human expertise remains crucial for interpreting results and making strategic decisions about risk management.

The most mature organizations implement continuous improvement cycles for their security programs. Regular red team exercises test defenses, while purple team collaborations ensure detection capabilities match known attack techniques. These practices, combined with thorough incident retrospectives, create organizations that learn from both successes and failures.

Ultimately, modern security requires balancing multiple priorities. Strong technical controls must coexist with user productivity, while compliance requirements inform but don't dictate security strategy. Organizations that master this balance create resilient environments capable of withstanding today's sophisticated threats while remaining agile enough to adapt to tomorrow's challenges.