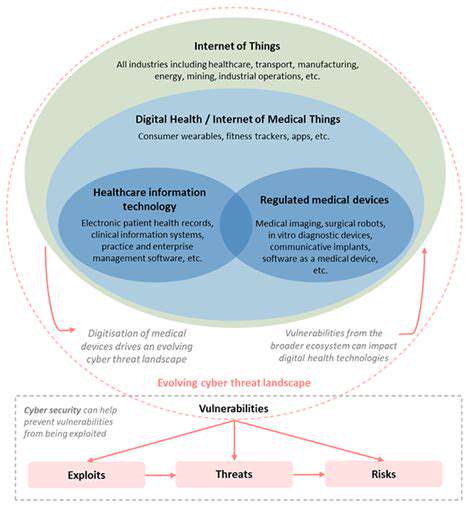

Phishing attacks are no longer confined to email. AI-driven detection systems must extend their coverage to other communication channels, such as instant messaging, social media, and phone calls. Covering these channels protects organizations against a broader range of attack vectors and makes their security posture more robust and resilient, which is vital in the modern threat landscape.

Machine Learning: Adapting to Evolving Threats

Adapting to Evolving Data

Machine learning models learn from data, but the data itself can change over time, so a model trained on historical data may perform worse on newer data whose patterns have shifted. Adapting models to these shifts is crucial for maintaining accuracy and reliability. It requires techniques that let the model learn from new data without discarding the knowledge it has already gained.

A key part of this adaptation is continuous learning: updating the model incrementally as new data becomes available instead of retraining it from scratch each time. This approach is often more computationally efficient, and it lets the model build on what it has already learned rather than relearning it.

Continuous Learning Techniques

Continuous learning can be implemented in several ways. Online learning algorithms process data instances one at a time, which makes them well suited to data that arrives as a continuous stream. Incremental learning, another important approach, adapts the model to each new instance or batch of data by updating its internal parameters or structure.
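As a minimal sketch of online learning, the class below trains a binary logistic regression with stochastic gradient descent, updating its weights after every single example and then discarding that example. The class name, the `partial_fit` method name, the learning rate, and the synthetic stream (label 1 when the first feature exceeds the second) are illustrative assumptions, not a specific library's API.

```python
import math
import random


class OnlineLogisticRegression:
    """Binary logistic regression trained one example at a time with SGD (illustrative)."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x):
        # Linear score passed through a numerically stable sigmoid.
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        ez = math.exp(z)
        return ez / (1.0 + ez)

    def predict(self, x):
        return 1 if self.predict_proba(x) >= 0.5 else 0

    def partial_fit(self, x, y):
        # For one example, the log-loss gradient w.r.t. the weights is (p - y) * x.
        error = self.predict_proba(x) - y
        for i, xi in enumerate(x):
            self.weights[i] -= self.learning_rate * error * xi
        self.bias -= self.learning_rate * error


def make_stream(n, rng):
    # Hypothetical stream: label is 1 when the first feature exceeds the second.
    for _ in range(n):
        x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
        yield x, int(x[0] > x[1])


model = OnlineLogisticRegression(n_features=2, learning_rate=0.5)
for x, y in make_stream(2000, random.Random(0)):
    model.partial_fit(x, y)  # each instance is used once, then discarded
```

Because each update touches only one example, memory use stays constant however long the stream runs; the trade-off is that a single noisy example can nudge the weights, which is why the learning rate matters.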

These methods help a model keep up with changing data, especially in dynamic environments. The right choice depends on the characteristics of the data, such as how fast it arrives and how quickly it changes, and on the level of performance required.

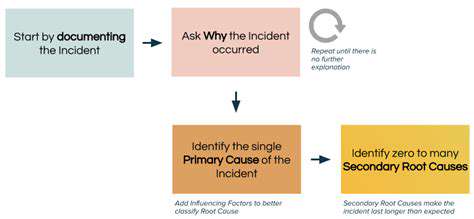

Model Monitoring and Evaluation

Regular monitoring is essential for detecting significant changes in the model's performance. This involves tracking key metrics such as precision, recall, and F1-score and watching for sustained drops. Monitoring enables timely intervention, such as retraining or adjusting the model, before degraded performance goes unnoticed for long.
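One way to put this into practice is to compute the metrics over a sliding window of recent predictions and raise an alert when F1 falls noticeably below a baseline. The sketch below assumes binary labels and that ground-truth labels eventually arrive for scored items (for example, after analyst review); the window size, baseline F1, and tolerance are hypothetical values to tune.

```python
from collections import deque


def precision_recall_f1(pairs):
    """Compute precision, recall and F1 from binary (y_true, y_pred) pairs."""
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


class MetricMonitor:
    """Tracks metrics over a sliding window and flags F1 drops below a baseline."""

    def __init__(self, window_size=500, baseline_f1=0.9, tolerance=0.05):
        self.window = deque(maxlen=window_size)  # oldest pairs fall out automatically
        self.baseline_f1 = baseline_f1
        self.tolerance = tolerance

    def update(self, y_true, y_pred):
        self.window.append((y_true, y_pred))

    def check(self):
        """Return (metrics, alert); alert is True when F1 is too far below baseline."""
        if len(self.window) < self.window.maxlen:
            return None, False  # not enough labeled data yet
        metrics = precision_recall_f1(self.window)
        return metrics, metrics[2] < self.baseline_f1 - self.tolerance
```

In use, `update` is called as each label arrives and `check` periodically; requiring a full window before judging avoids alerts based on a handful of examples.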

It is also crucial to evaluate the model on newly arrived data, not only on the data it was originally tested on. This shows whether the model is still accurate as data patterns evolve.
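A common way to evaluate on newly arrived data is prequential (test-then-train) evaluation: each example is first used to test the model and only then used to update it, so every score comes from data the model has not yet seen. The sketch below pairs it with a simple incremental nearest-centroid classifier and a hypothetical two-class stream whose class centres swap halfway through; the classifier, stream, and window size are illustrative assumptions.

```python
import random


class IncrementalNearestCentroid:
    """Keeps a running sum and count per class; predicts the class with the closest mean."""

    def __init__(self):
        self.sums = {}
        self.counts = {}

    def predict(self, x):
        if not self.counts:
            return None  # nothing learned yet

        def squared_distance(label):
            centroid = [s / self.counts[label] for s in self.sums[label]]
            return sum((a - b) ** 2 for a, b in zip(x, centroid))

        return min(self.counts, key=squared_distance)

    def learn_one(self, x, y):
        if y not in self.sums:
            self.sums[y] = [0.0] * len(x)
            self.counts[y] = 0
        self.sums[y] = [s + xi for s, xi in zip(self.sums[y], x)]
        self.counts[y] += 1


def prequential_accuracy(model, stream, window=200):
    """Test-then-train: score each example before learning from it."""
    accuracies, correct, seen = [], 0, 0
    for x, y in stream:
        correct += int(model.predict(x) == y)  # test on the unseen example first...
        model.learn_one(x, y)                  # ...then train on it
        seen += 1
        if seen % window == 0:
            accuracies.append(correct / window)
            correct = 0
    return accuracies


def drifting_stream(n, drift_at, rng):
    # Hypothetical stream: class centres swap at index `drift_at` (concept drift).
    for i in range(n):
        y = rng.randint(0, 1)
        centre = 3.0 * y if i < drift_at else 3.0 * (1 - y)
        yield [rng.gauss(centre, 0.5), rng.gauss(centre, 0.5)], y


accuracies = prequential_accuracy(
    IncrementalNearestCentroid(), drifting_stream(2000, 1000, random.Random(42))
)
```

On this stream the windowed accuracy stays near 1 before the swap and collapses right after it, which is the kind of change a single evaluation on the original test set would miss.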

Feature Engineering and Selection

As data evolves, the importance of individual features can change, so the features used by the model should be reviewed and updated regularly. Feature engineering, the process of deriving new features from existing data, can be crucial for capturing new patterns. Feature selection techniques can also be rerun on recent data to identify the features most relevant to it.
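As a simple illustration of re-running feature selection on recent data, the sketch below ranks features by the absolute Pearson correlation between each feature and a binary label over a window of recent rows. Correlation ranking is just one basic filter method, chosen here for brevity, and it only captures linear relationships; the feature names and data are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 when either input is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(xs, ys))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in xs))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in ys))
    return cov / (sd_x * sd_y) if sd_x and sd_y else 0.0


def rank_features(rows, labels, names, top_k=2):
    """Return the top_k feature names by |correlation| with the label."""
    scores = {
        name: abs(pearson([row[j] for row in rows], labels))
        for j, name in enumerate(names)
    }
    return sorted(scores, key=scores.get, reverse=True)[:top_k]


names = ["feature_a", "feature_b", "feature_c"]  # hypothetical features
old_window = [[1, 0, 5], [0, 1, 5], [1, 1, 6], [0, 0, 6]]
new_window = [[1, 1, 5], [0, 0, 5], [1, 0, 6], [0, 1, 6]]
print(rank_features(old_window, [1, 0, 1, 0], names, top_k=1))  # ['feature_a']
print(rank_features(new_window, [1, 0, 0, 1], names, top_k=1))  # ['feature_b']
```

The same code picks a different top feature once the recent window reflects a new pattern, which is the signal to revisit the model's inputs.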

Handling Concept Drift

Concept drift, a change over time in the relationship between the features and the target variable, poses a significant challenge to machine learning models because patterns the model learned earlier may no longer hold. Ensemble methods, which combine the predictions of multiple models, can improve robustness to concept drift; for example, members trained on different periods can be reweighted or replaced as their accuracy changes.
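A minimal sketch of a drift-aware ensemble is the weighted majority vote below: each member starts with equal weight, its weight is multiplied by a factor `beta` every time it misclassifies a labeled example, and a small floor keeps a down-weighted member able to recover if its concept returns. The two member rules and the `beta` and floor values are hypothetical; fuller methods such as Dynamic Weighted Majority also add and remove members, which this sketch leaves out.

```python
class WeightedMajorityEnsemble:
    """Weighted majority vote over fixed binary classifiers (callables x -> 0 or 1)."""

    def __init__(self, members, beta=0.5, min_weight=0.01):
        self.members = members
        self.weights = [1.0] * len(members)
        self.beta = beta
        self.min_weight = min_weight

    def predict(self, x):
        votes = {0: 0.0, 1: 0.0}
        for member, weight in zip(self.members, self.weights):
            votes[member(x)] += weight
        return 1 if votes[1] > votes[0] else 0

    def update(self, x, y):
        # Penalize members that got this labeled example wrong.
        for i, member in enumerate(self.members):
            if member(x) != y:
                self.weights[i] = max(self.weights[i] * self.beta, self.min_weight)
        # Renormalize so the weights sum to 1 and never underflow.
        total = sum(self.weights)
        self.weights = [w / total for w in self.weights]


def old_rule(x):
    return int(x[0] > 0)  # hypothetical member fitted to the old concept


def new_rule(x):
    return int(x[1] > 0)  # hypothetical member fitted to the new concept


ensemble = WeightedMajorityEnsemble([old_rule, new_rule])
points = [(1, 1), (1, -1), (-1, 1), (-1, -1)]
for _ in range(25):  # before drift, labels follow old_rule
    for p in points:
        ensemble.update(p, old_rule(p))
before = ensemble.predict((1, -1))
for _ in range(25):  # after drift, labels follow new_rule
    for p in points:
        ensemble.update(p, new_rule(p))
after = ensemble.predict((1, -1))
```

Here `before` is 1 and `after` is 0: the vote follows whichever member has been right recently, without retraining either member.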

These techniques help the model handle changing patterns in the data and limit the resulting loss of accuracy. When the underlying relationships change substantially, however, periodic retraining of the model may still be necessary.
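Retraining can be scheduled on a timer or triggered when a drift detector signals a change. As one example of the latter, the sketch below follows the Drift Detection Method (DDM) of Gama et al. (2004): it tracks the running error rate p and its standard deviation s = sqrt(p(1 - p) / n), remembers the values p_min and s_min at the point where p + s was lowest, and reports a warning when p + s reaches p_min + 2·s_min and a drift when it reaches p_min + 3·s_min. The class and method names and the 30-sample warm-up are illustrative choices, and the retraining step itself is left as a placeholder.

```python
import math


class DDM:
    """Drift Detection Method over a stream of 0/1 prediction errors (sketch)."""

    def __init__(self, min_samples=30):
        self.min_samples = min_samples
        self.reset()

    def reset(self):
        self.n = 0
        self.p = 0.0  # running error rate
        self.p_min = float("inf")
        self.s_min = float("inf")

    def update(self, error):
        """Feed 1 for a misclassification, 0 otherwise; returns 'drift', 'warning' or 'stable'."""
        self.n += 1
        self.p += (error - self.p) / self.n  # incremental mean of the errors
        s = math.sqrt(self.p * (1 - self.p) / self.n)
        if self.n < self.min_samples:
            return "stable"  # warm-up: too few samples to judge
        if self.p + s < self.p_min + self.s_min:
            self.p_min, self.s_min = self.p, s  # new best (lowest) error level
        if self.p + s >= self.p_min + 3 * self.s_min:
            self.reset()  # start fresh after signalling drift
            return "drift"
        if self.p + s >= self.p_min + 2 * self.s_min:
            return "warning"
        return "stable"


detector = DDM()
# Hypothetical error stream: 10% errors, then 50% errors after a concept change.
errors = [1 if i % 10 == 9 else 0 for i in range(1000)]
errors += [1 if i % 2 == 0 else 0 for i in range(200)]
for step, error in enumerate(errors):
    if detector.update(error) == "drift":
        print(f"drift detected at step {step}; retrain the model here")
        break
```

One caveat of this rule: if the model makes no errors during warm-up, s_min is 0 and the very first error counts as drift, so the detector suits models with a nonzero baseline error rate.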

Reinforcement Learning for Adaptation

Reinforcement learning (RL) offers a powerful approach for adapting to evolving environments. In RL, an agent learns which actions to take by interacting with an environment and receiving feedback in the form of rewards, aiming to maximize the total reward it collects. Because this feedback keeps arriving, the agent can adjust its strategy as the environment changes.

This ability to keep learning makes RL particularly suitable for situations where the relationship between actions and outcomes is constantly evolving, a kind of adaptability that is essential for maintaining strong performance in complex, dynamic systems.
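The simplest RL setting, a multi-armed bandit with a single state, is enough to show this adaptability. The sketch below uses an epsilon-greedy agent whose value estimates are updated with a constant step size, which weights recent rewards more heavily than old ones so the estimates can track rewards that change over time. The two-action environment, whose better action switches halfway through, and the epsilon and step-size values are hypothetical; RL problems with states and delayed rewards would need a method such as Q-learning instead.

```python
import random


class EpsilonGreedyAgent:
    """Epsilon-greedy bandit agent with constant-step-size value estimates."""

    def __init__(self, n_actions, epsilon=0.1, step_size=0.1, seed=0):
        self.values = [0.0] * n_actions  # estimated reward of each action
        self.epsilon = epsilon
        self.step_size = step_size
        self.rng = random.Random(seed)

    def greedy_action(self):
        return max(range(len(self.values)), key=self.values.__getitem__)

    def act(self):
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.values))  # explore
        return self.greedy_action()  # exploit

    def learn(self, action, reward):
        # Constant step size: an exponential recency-weighted average of rewards.
        self.values[action] += self.step_size * (reward - self.values[action])


def reward_probability(action, t, switch_at=2000):
    # Hypothetical environment: the better action flips from 0 to 1 at switch_at.
    best = 0 if t < switch_at else 1
    return 0.8 if action == best else 0.2


env_rng = random.Random(1)
agent = EpsilonGreedyAgent(n_actions=2)
preferred = []
for t in range(4000):
    action = agent.act()
    reward = 1.0 if env_rng.random() < reward_probability(action, t) else 0.0
    agent.learn(action, reward)
    if t in (1999, 3999):
        preferred.append(agent.greedy_action())  # preference before/after the switch
```

With a sample-average update (step size 1/n) instead, the estimate for the formerly better action would be averaged over thousands of old rewards and would adjust far more slowly after the switch.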