Real-Time Monitoring and Adaptive Response with Machine Learning

Real-Time Data Acquisition and Processing

Real-time security monitoring depends on acquiring and processing data quickly from many sources: sensor data, network traffic logs, system event logs, and user behavior patterns. Data pipelines collect this data and transform it into a format that machine learning algorithms can consume. The transformation involves data cleaning, normalization, and feature engineering so that the data is accurate and consistent, and it must keep pace with the incoming stream to preserve real-time responsiveness.
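As a rough illustration of cleaning and normalizing events as they arrive, the sketch below drops malformed records and converts each numeric field to a z-score using running statistics (Welford's online algorithm), so no batch pass over the data is needed. The field names `bytes` and `duration_s` and the helper names are assumptions made for this example, not part of any particular product.

```python
import math
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator, Optional


@dataclass
class RunningStats:
    """Welford's online mean and variance for one numeric feature."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean

    def update(self, value: float) -> None:
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (value - self.mean)

    @property
    def std(self) -> float:
        # Sample standard deviation; 0.0 until two values have been seen.
        if self.count < 2:
            return 0.0
        return math.sqrt(self.m2 / (self.count - 1))

    def zscore(self, value: float) -> float:
        s = self.std
        return 0.0 if s == 0.0 else (value - self.mean) / s


def clean_event(raw: dict) -> Optional[dict]:
    """Coerce the (hypothetical) fields to floats; return None if malformed."""
    try:
        return {"bytes": float(raw["bytes"]),
                "duration_s": float(raw["duration_s"])}
    except (KeyError, TypeError, ValueError):
        return None


def normalize_stream(events: Iterable[dict],
                     stats: Dict[str, RunningStats]) -> Iterator[dict]:
    """Yield z-scored features for each well-formed event, one at a time."""
    for raw in events:
        event = clean_event(raw)
        if event is None:
            continue  # cleaning step: skip malformed records
        features = {}
        for name, value in event.items():
            # Score against the history seen so far, then fold the value in.
            features[name] = stats[name].zscore(value)
            stats[name].update(value)
        yield features
```

Because `normalize_stream` is a generator, each event is processed as soon as it arrives, and memory use stays constant regardless of how long the stream runs.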

The speed and accuracy of this initial processing stage directly affect the machine learning models that follow. Anomalies and threats can only be detected in real time, and answered with an immediate, adaptive response, if ingestion and transformation keep up with the data. A pipeline that cannot handle the volume and velocity of incoming data will delay or drop events, and threats can go undetected as a result.
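One simple way to make overload visible, sketched here under the assumption that losing the oldest events is acceptable, is a bounded buffer that counts what it evicts. The class name `BoundedBuffer` is illustrative only.

```python
from collections import deque


class BoundedBuffer:
    """Fixed-capacity event buffer that evicts the oldest event when full
    and counts evictions so that data loss can be monitored."""

    def __init__(self, capacity: int) -> None:
        self._items = deque(maxlen=capacity)
        self.dropped = 0

    def push(self, event) -> None:
        if len(self._items) == self._items.maxlen:
            self.dropped += 1  # the oldest event is about to be evicted
        self._items.append(event)

    def drain(self) -> list:
        """Return all buffered events in arrival order and empty the buffer."""
        items = list(self._items)
        self._items.clear()
        return items
```

A rising `dropped` count is a signal that the downstream stages are not keeping up and that some events are never being scored.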

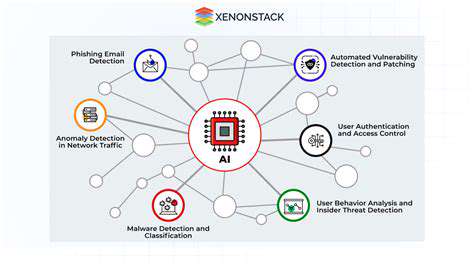

Machine Learning Models for Anomaly Detection

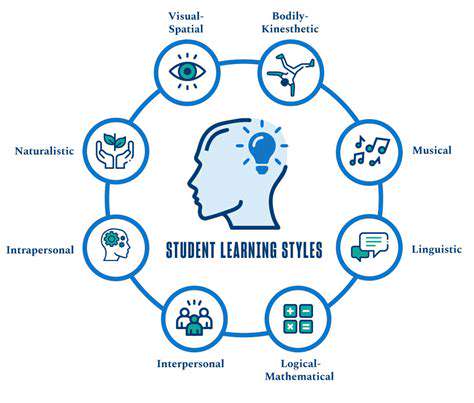

A critical component of real-time security monitoring is a set of machine learning models that can identify anomalies in the collected data. These models range from simple statistical methods to complex deep learning architectures, each with its own strengths and weaknesses. The appropriate choice depends on the nature of the data and the specific security threats being addressed.
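At the simple statistical end of that range, a detector can be as small as a robust z-score over a sliding window. The sketch below uses the median and the median absolute deviation (MAD); the window size, the threshold of 3.5, and the class name are assumptions for illustration and would need tuning on real data.

```python
from collections import deque
from statistics import median


class MadDetector:
    """Flags values whose robust z-score (median/MAD based) exceeds a threshold."""

    def __init__(self, window: int = 50, threshold: float = 3.5,
                 min_history: int = 10) -> None:
        self.history = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def score(self, value: float) -> float:
        if len(self.history) < self.min_history:
            return 0.0  # not enough history to judge yet
        med = median(self.history)
        mad = median(abs(x - med) for x in self.history)
        if mad == 0:
            return 0.0 if value == med else float("inf")
        # 0.6745 rescales MAD so the score is comparable to a standard z-score.
        return 0.6745 * abs(value - med) / mad

    def observe(self, value: float) -> bool:
        """Score a value, then add it to the history only if it looks normal."""
        is_anomaly = self.score(value) > self.threshold
        if not is_anomaly:
            self.history.append(value)
        return is_anomaly
```

Keeping flagged values out of the history stops an attack from shifting the baseline, at the cost of adapting slowly when normal behavior genuinely changes; appending every value is the opposite trade-off.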

For example, Support Vector Machines (SVMs), often used in their one-class form for anomaly detection, can capture complex patterns in high-dimensional data, while recurrent neural networks (RNNs) are well suited to sequential data such as network traffic flows. The model's complexity and training requirements also deserve careful consideration: the model has to score events in real time without degrading system performance.
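Whether a given model is fast enough can be checked empirically before deployment. The following sketch times a scoring function on sample inputs and compares the 99th-percentile latency against a budget; `score_fn`, the sample data, and the budget value are placeholders chosen for this example.

```python
import time
from statistics import quantiles
from typing import Callable, Sequence


def latency_p99_ms(score_fn: Callable, samples: Sequence, repeats: int = 1) -> float:
    """Return the 99th-percentile per-call latency of score_fn in milliseconds.

    Needs at least two timed calls in total.
    """
    timings = []
    for _ in range(repeats):
        for sample in samples:
            start = time.perf_counter()
            score_fn(sample)
            timings.append((time.perf_counter() - start) * 1000.0)
    # quantiles(n=100) returns 99 cut points; the last one is the 99th percentile.
    return quantiles(timings, n=100)[-1]


def within_budget(p99_ms: float, budget_ms: float) -> bool:
    """True if the measured tail latency fits the per-event budget."""
    return p99_ms <= budget_ms
```

Measuring the tail rather than the mean matters here, because occasional slow calls are what cause a real-time pipeline to fall behind.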

Adaptive Response Mechanisms

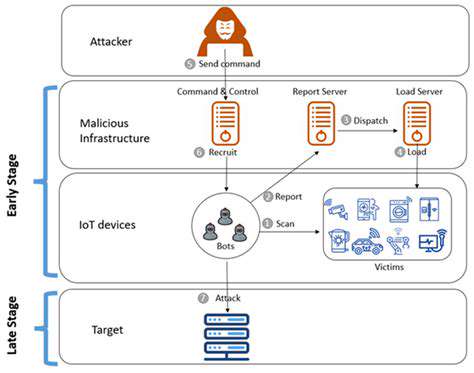

Once anomalies are detected, the system must trigger an appropriate response. This could involve blocking malicious traffic, quarantining compromised systems, or initiating automated incident response procedures. The response should be chosen dynamically, based on the characteristics of each detected anomaly, and the available responses should be adjusted as threats evolve.
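The mapping from a detected anomaly to a response can be sketched as a small policy function. The action names, the `kind` categories, and the score cut-offs below are all hypothetical; a real deployment would call into its own firewall, endpoint, and ticketing tools.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Action(Enum):
    LOG_ONLY = "log_only"
    BLOCK_TRAFFIC = "block_traffic"
    QUARANTINE_HOST = "quarantine_host"
    OPEN_INCIDENT = "open_incident"


@dataclass(frozen=True)
class Anomaly:
    kind: str     # "network" or "host" (illustrative categories)
    score: float  # anomaly score in [0, 1]; higher means more suspicious
    source: str   # where the anomaly was observed, e.g. an IP or hostname


def choose_actions(anomaly: Anomaly, block_at: float = 0.7,
                   escalate_at: float = 0.9) -> List[Action]:
    """Pick responses based on the anomaly's kind and score."""
    if anomaly.score < block_at:
        return [Action.LOG_ONLY]
    if anomaly.kind == "network":
        actions = [Action.BLOCK_TRAFFIC]
    else:
        actions = [Action.QUARANTINE_HOST]
    if anomaly.score >= escalate_at:
        actions.append(Action.OPEN_INCIDENT)
    return actions
```

Keeping the policy separate from the code that executes each action makes it easier to review and to change thresholds without touching enforcement logic.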

A key aspect of adaptive response is the ability to learn from past incidents. By analyzing the characteristics of detected threats and the effectiveness of implemented responses, the system can continuously refine its algorithms and improve its overall security posture. This iterative learning process is essential for maintaining a proactive and effective security strategy.
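A very small version of such a feedback loop, assuming analysts label each past case as a real threat or not, is an alert threshold that moves in response to confirmed mistakes. The step size and bounds are arbitrary illustrative values.

```python
class AdaptiveThreshold:
    """Alert threshold nudged by analyst feedback on past decisions."""

    def __init__(self, threshold: float = 0.7, step: float = 0.02,
                 low: float = 0.5, high: float = 0.95) -> None:
        self.threshold = threshold
        self.step = step
        self.low = low
        self.high = high

    def should_alert(self, score: float) -> bool:
        return score >= self.threshold

    def record_feedback(self, was_true_threat: bool, was_alerted: bool) -> None:
        if was_alerted and not was_true_threat:
            # False positive: become slightly less sensitive.
            self.threshold = min(self.high, self.threshold + self.step)
        elif was_true_threat and not was_alerted:
            # Missed threat: become slightly more sensitive.
            self.threshold = max(self.low, self.threshold - self.step)
        # Correct decisions leave the threshold unchanged.
```

The bounds keep a run of one-sided feedback from pushing the threshold to a point where the system alerts on everything or on nothing.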

Integration with Existing Security Systems

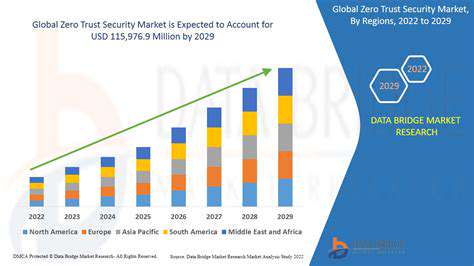

Integrating machine learning-based security monitoring with existing security infrastructure is crucial for seamless deployment and operation. The integration should cause minimal disruption to existing systems and allow data to flow between the different security tools and platforms, so that the new solution complements the existing infrastructure rather than becoming a silo.
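Data exchange between tools is easier when alerts are emitted in one agreed, versioned format. The sketch below serializes an alert to JSON; the field names and `schema_version` are a made-up schema for illustration, not a standard that any particular SIEM or platform expects.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def to_alert_json(source: str, score: float, description: str,
                  observed_at: Optional[datetime] = None) -> str:
    """Serialize an alert into a (hypothetical) shared JSON format."""
    if observed_at is None:
        observed_at = datetime.now(timezone.utc)
    alert = {
        "schema_version": 1,
        "source": source,
        "score": round(float(score), 4),
        "description": description,
        "observed_at": observed_at.isoformat(),  # ISO 8601 timestamp
    }
    # sort_keys gives stable output, which helps with diffing and testing.
    return json.dumps(alert, sort_keys=True)
```

Including an explicit schema version lets consumers detect format changes instead of silently misreading fields.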

The integration process should also consider scalability and maintainability. As the volume of data and the complexity of threats increase, the system must be capable of scaling to handle the increased workload without compromising performance. Clear documentation and well-defined interfaces are paramount for long-term maintainability and future development.

Evaluating Model Performance and Accuracy

Regular evaluation and testing of the machine learning models are critical to ensuring their accuracy and effectiveness. This means tracking appropriate metrics: precision (the share of alerts that correspond to real threats), recall (the share of real threats that produced an alert), and the F1-score (the harmonic mean of the two). Monitoring these metrics over time allows issues to be identified and remediated promptly.
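These three metrics follow directly from counts of true positives, false positives, and false negatives. A minimal sketch, assuming labels and predictions are given as parallel sequences of 0/1 values:

```python
from typing import Sequence, Tuple


def confusion_counts(y_true: Sequence[int],
                     y_pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (tp, fp, fn, tn) for binary labels where 1 means malicious."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred and truth:
            tp += 1
        elif pred and not truth:
            fp += 1
        elif truth:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def precision_recall_f1(y_true: Sequence[int],
                        y_pred: Sequence[int]) -> Tuple[float, float, float]:
    """Compute precision, recall, and F1; undefined ratios are reported as 0.0."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1
```

Plain accuracy is left out on purpose: when malicious events are rare, a model that never alerts can still score very high accuracy.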

Furthermore, ongoing analysis of false positives and false negatives is essential for model refinement. By understanding the reasons behind incorrect classifications, security teams can improve model training and enhance the system's ability to accurately differentiate between benign and malicious activities. A robust evaluation process ensures the long-term reliability of the security system.
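A first step in that analysis is often just counting where the mistakes come from. The sketch below tallies false positives and false negatives per data source; the record layout `(source, is_malicious, was_flagged)` is an assumption for this example.

```python
from collections import Counter
from typing import Iterable, Tuple


def error_breakdown(
    records: Iterable[Tuple[str, bool, bool]]
) -> Tuple[Counter, Counter]:
    """Count false positives and false negatives per source.

    Each record is (source, is_malicious, was_flagged).
    """
    false_positives = Counter()
    false_negatives = Counter()
    for source, is_malicious, was_flagged in records:
        if was_flagged and not is_malicious:
            false_positives[source] += 1
        elif is_malicious and not was_flagged:
            false_negatives[source] += 1
    return false_positives, false_negatives
```

Calling `most_common()` on either counter shows which sources account for most of the errors and are therefore the best candidates for new features or retraining data.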

Security Considerations and Ethical Implications

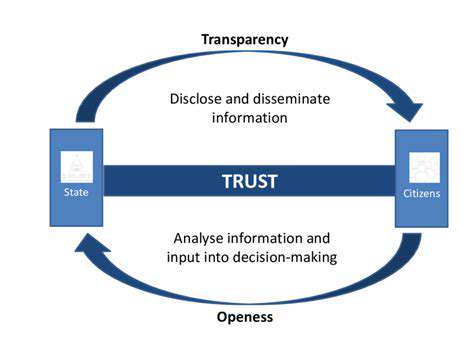

Implementing AI-driven security systems necessitates careful consideration of security implications and ethical concerns. Data privacy, bias in algorithms, and potential misuse of the system must be addressed to ensure responsible deployment. Robust security measures to protect the sensitive data used to train and operate the system are crucial.
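One possible measure for limiting privacy exposure, offered here as an example rather than a complete solution, is to replace user identifiers with keyed hashes before the data reaches the training pipeline. The function name and key handling below are assumptions; in practice the key would come from a secrets manager and be rotated under a defined policy.

```python
import hashlib
import hmac


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Return a stable pseudonym for user_id using HMAC-SHA256.

    The same user always maps to the same token, so behavior can still be
    correlated, but the original ID cannot be recovered without the key.
    """
    digest = hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()
```

A keyed hash is used instead of a plain hash because user IDs are usually guessable, which would let anyone rebuild the mapping by hashing candidate IDs.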

Transparency in the decision-making processes of the AI systems is also important. Understanding how the system arrives at its conclusions is essential for building trust and ensuring accountability. Thorough ethical review and ongoing monitoring are crucial for maintaining the integrity and responsible use of AI-driven security solutions.
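For simple detectors, a basic form of transparency is to report which features contributed most to an alert. The sketch below assumes the anomaly score is built from per-feature z-scores and returns the largest deviations; it does not apply to models whose features interact in more complex ways, and the feature names are illustrative.

```python
from typing import Dict, List, Tuple


def explain_score(zscores: Dict[str, float],
                  top_n: int = 3) -> List[Tuple[str, float]]:
    """Return the top_n features ranked by absolute z-score, largest first."""
    ranked = sorted(zscores.items(), key=lambda item: abs(item[1]), reverse=True)
    return ranked[:top_n]
```

Attaching this list to each alert gives an analyst a starting point for judging whether the alert is reasonable, and it leaves a record that can be reviewed later.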