Modern cities are witnessing an unprecedented expansion of surveillance technologies, transforming how urban spaces function. This shift stems from multiple drivers: escalating public safety demands, crime reduction priorities, and the push for smarter city governance. Walk down any major street today, and you'll encounter a web of CCTV cameras, facial recognition systems, and even drone surveillance - some of it technology that was science fiction just two decades ago. This technological infiltration fundamentally reshapes the contract between citizens and their urban environments.

While these systems generate terabytes of data daily, their true impact extends beyond mere information collection. The psychological effect of constant observation creates what sociologists call the panopticon effect - behavioral modification through perceived surveillance. Urban dwellers now navigate spaces where every action might be recorded, analyzed, and potentially used in ways they can't anticipate. This reality demands urgent conversations about technological boundaries in public spaces.

Privacy Concerns and Ethical Dilemmas

As surveillance networks expand, they create invisible tension lines between security and personal freedom. Consider London's Ring of Steel - a cordon of cameras and checkpoints around the City of London, the capital's financial district, dense enough to log vehicles entering and leaving it. While effective for counterterrorism, such systems also capture millions of innocent movements daily. The ethical dilemma emerges when we realize most citizens don't know how long their data is stored, who accesses it, or for what secondary purposes it might be used.

These systems often employ machine learning algorithms that claim objectivity but frequently mirror societal biases. Independent evaluations have found that some facial recognition systems misidentify people from ethnic minorities at rates 10 times higher, or more, than they do other groups. When such flawed technology informs policing decisions, it risks automating discrimination at scale. The lack of transparency around these systems compounds the problem, leaving citizens vulnerable to decisions they can't see or challenge.
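To make a claim like "10 times more frequently" concrete, it helps to see how such a disparity is usually measured: the false match rate is computed separately for each demographic group and the rates are then compared. The sketch below is illustrative only. The function name, the group labels, and the trial data are all invented for the example and do not come from any real evaluation.

```python
from collections import defaultdict
from typing import Iterable


def false_match_rates(trials: Iterable[tuple[str, bool, bool]]) -> dict[str, float]:
    """Return the false match rate per group.

    Each trial is (group, predicted_match, same_person). Only comparisons
    between two different people can produce a false match, so trials where
    same_person is True are skipped.
    """
    impostor_trials = defaultdict(int)
    false_matches = defaultdict(int)
    for group, predicted_match, same_person in trials:
        if same_person:
            continue
        impostor_trials[group] += 1
        if predicted_match:
            false_matches[group] += 1
    return {group: false_matches[group] / n for group, n in impostor_trials.items()}


# Synthetic trials, invented purely for illustration.
trials = (
    [("group_a", False, False)] * 99 + [("group_a", True, False)] * 1
    + [("group_b", False, False)] * 90 + [("group_b", True, False)] * 10
)
rates = false_match_rates(trials)
disparity = rates["group_b"] / rates["group_a"]  # how many times more often group_b is falsely matched
print(rates, disparity)
```

A ratio like `disparity` only means something when both groups are tested under comparable conditions, which is one reason transparency about evaluation methods matters as much as the headline number.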

The Impact on Urban Life and Social Interactions

Surveillance doesn't just watch cities - it changes how they function. Psychologists observe that constant monitoring creates chilling effects on public behavior. Protesters might avoid gatherings, artists might censor street performances, and ordinary people might think twice before helping strangers. The very spontaneity that makes cities vibrant risks being suffocated by the awareness of being perpetually watched.

Urban planners now grapple with an unexpected challenge: designing public spaces that feel both safe and free. The traditional solution - more cameras - often backfires, creating fortress-like environments that deter the casual social interactions vital for community building. Barcelona's superblock initiative offers an alternative approach, using smart sensors that collect aggregate data without identifying individuals - proving innovative solutions exist when we prioritize human experience over surveillance.
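One way to picture "aggregate data without identifying individuals" is a sensor pipeline that never stores identifiers at all and publishes only counts per zone and hour, dropping any cell too small to hide an individual. The following is a minimal sketch under those assumptions, not a description of Barcelona's actual system. The function name, the zone names, and the `min_count` threshold are hypothetical.

```python
from collections import Counter
from typing import Iterable


def aggregate_footfall(
    events: Iterable[tuple[str, int]], min_count: int = 5
) -> dict[tuple[str, int], int]:
    """Count sensor events per (zone, hour) and suppress small cells.

    Events carry no identifiers, only where and when something was detected.
    Cells with fewer than min_count events are dropped so that a lone
    passer-by cannot be singled out in the published figures.
    """
    counts = Counter(events)
    return {cell: n for cell, n in counts.items() if n >= min_count}


# Hypothetical readings: (zone, hour of day).
events = [("plaza", 9)] * 7 + [("plaza", 23)] * 2 + [("market", 9)] * 5
summary = aggregate_footfall(events)
print(summary)
```

Suppressing small counts is a simple safeguard rather than a formal privacy guarantee, but it shows the design choice: the privacy decision is made at collection time, not left to whoever later queries the data.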

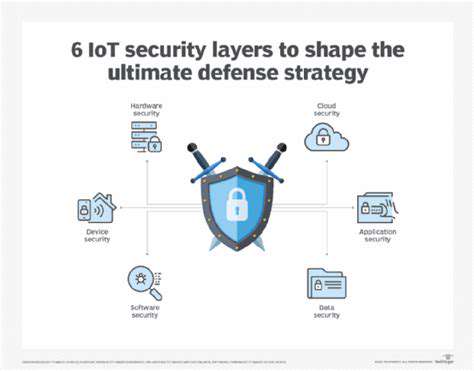

Strategies for Responsible Surveillance Implementation

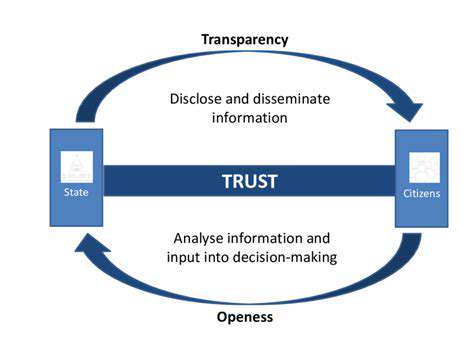

Effective governance of urban surveillance requires more than technical fixes - it demands institutional innovation. Singapore's surveillance framework, while extensive, includes sunset clauses requiring regular legislative review. This creates natural checkpoints where citizens can demand evidence of effectiveness before systems are renewed. Such models show how democracies can balance security needs with public oversight.

Technology itself can also provide solutions. Differential privacy techniques add carefully calibrated random noise to aggregate statistics, so systems can still detect patterns and anomalies while making it hard to infer anything about a specific individual. Zurich's traffic monitoring system uses this approach, identifying dangerous intersections without tracking specific vehicles. These examples demonstrate that with political will and technical creativity, cities can have security without sacrificing fundamental freedoms.
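For readers curious what differential privacy looks like in practice, here is a minimal sketch of its best-known building block, the Laplace mechanism, applied to per-intersection incident counts. It is not a reconstruction of any city's system. The function names and the sample counts are hypothetical, and the sketch assumes each recorded incident affects exactly one count (a sensitivity of 1).

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_counts(
    counts: dict[str, int], epsilon: float, rng: random.Random
) -> dict[str, float]:
    """Release noisy counts under epsilon-differential privacy.

    Assumption: adding or removing one incident changes a single count by 1,
    so the noise scale is 1 / epsilon. Smaller epsilon means more noise and
    stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = 1.0 / epsilon
    return {name: value + laplace_noise(scale, rng) for name, value in counts.items()}


# Hypothetical near-miss counts per intersection.
incidents = {"intersection_1": 42, "intersection_2": 3, "intersection_3": 17}
noisy = private_counts(incidents, epsilon=0.5, rng=random.Random(7))
print(noisy)
```

With counts this far apart, the most dangerous intersection still stands out after noise is added, while the exact figures, and therefore any single incident's contribution, are blurred. Choosing `epsilon` is a policy decision as much as a technical one.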

Ethical Considerations and the Need for Public Dialogue

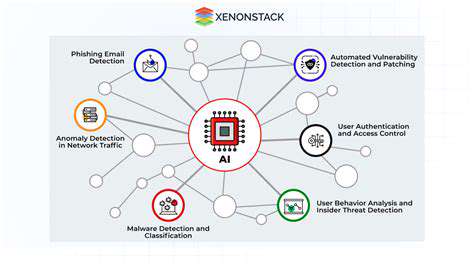

Ethical Considerations in AI Development

The AI revolution brings promises of medical breakthroughs and climate solutions, but also shadows of uncontrolled technological power. As systems grow more autonomous, we must ask not just what they can do, but what they should do. The most urgent ethical challenge isn't building smarter machines, but ensuring they serve human values rather than corporate or government interests.

Consider healthcare AI that prioritizes profitable patients over needy ones, or hiring algorithms that reinforce gender stereotypes. These aren't hypotheticals - they're documented cases showing how technology amplifies existing power imbalances. True ethical AI requires going beyond technical fixes to address the economic and political systems that shape technology's use.

Bias and Fairness in AI Algorithms

Algorithmic bias isn't a software glitch - it's a mirror reflecting our societal fractures. When a mortgage algorithm systematically disadvantages minority neighborhoods, it's not creating new discrimination but automating old patterns. The solution requires more than better data - it demands diverse teams who can spot these patterns before they're coded into systems.

Boston's AI fairness lab pioneered an innovative approach: stress-testing algorithms with "bias penetration tests" modeled on cybersecurity audits. By intentionally trying to make systems discriminate, they uncover hidden flaws before deployment. This proactive approach should become an industry standard, with independent auditors holding companies accountable.
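The simplest version of such a stress test is a "flip test": change only the protected attribute on each record and see whether the decision changes. The sketch below is one illustrative way to do that and is not the lab's actual method. The function names, the toy models, and the applicant records are all hypothetical.

```python
from typing import Callable, Iterable


def flip_test(
    model: Callable[[dict], bool],
    applicants: Iterable[dict],
    attribute: str,
    values: tuple[str, str],
) -> float:
    """Return the fraction of applicants whose decision changes when only
    the protected attribute is swapped between the two given values."""
    changed = 0
    total = 0
    for applicant in applicants:
        if applicant.get(attribute) not in values:
            continue
        other = values[1] if applicant[attribute] == values[0] else values[0]
        probe = {**applicant, attribute: other}  # identical except for the attribute
        if model(applicant) != model(probe):
            changed += 1
        total += 1
    return changed / total if total else 0.0


def biased_model(applicant: dict) -> bool:
    """Toy model that quietly demands a higher income from group B."""
    threshold = 50 if applicant["group"] == "A" else 60
    return applicant["income"] >= threshold


def neutral_model(applicant: dict) -> bool:
    """Toy model that ignores the group entirely."""
    return applicant["income"] >= 50


applicants = [
    {"income": 40, "group": "A"},
    {"income": 55, "group": "A"},
    {"income": 70, "group": "B"},
]
biased_rate = flip_test(biased_model, applicants, "group", ("A", "B"))
neutral_rate = flip_test(neutral_model, applicants, "group", ("A", "B"))
print(biased_rate, neutral_rate)
```

A flip test only catches direct dependence on the attribute. Real audits also need to probe proxies such as postcode, which is why diverse teams and independent auditors remain necessary.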

Transparency and Explainability in AI

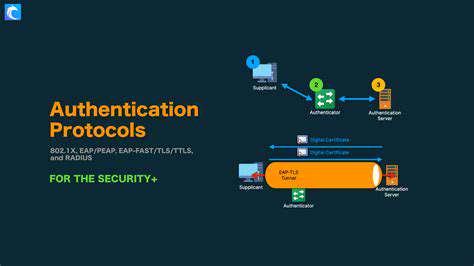

The black box problem goes beyond technical complexity - it creates democratic deficits. When AI denies someone parole or a loan, the person affected deserves more than "the algorithm decided." The EU's GDPR is widely read as establishing a right to explanation for automated decisions, but implementation remains spotty. True transparency requires systems that can articulate their reasoning in human-understandable terms.
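What might "articulating reasoning" look like in code? For a simple linear scoring model, each feature's contribution to the score can be reported directly, which gives the person affected concrete reasons rather than a bare verdict. This is a minimal sketch under that assumption. The weights, feature names, and threshold are invented, and more complex models need approximation techniques that this example does not cover.

```python
def explain_decision(
    weights: dict[str, float],
    features: dict[str, float],
    threshold: float,
    max_reasons: int = 2,
) -> tuple[bool, float, list[str]]:
    """Score an applicant with a linear model and list the features that
    pulled the score down the most."""
    contributions = {name: weights[name] * features[name] for name in weights}
    score = sum(contributions.values())
    approved = score >= threshold
    # Sort ascending so the most negative contributions come first.
    ranked = sorted(contributions.items(), key=lambda item: item[1])
    reasons = [name for name, value in ranked if value < 0][:max_reasons]
    return approved, score, reasons


# Hypothetical loan model and applicant.
weights = {"income": 0.5, "missed_payments": -2.0, "years_employed": 0.3}
applicant = {"income": 4.0, "missed_payments": 3.0, "years_employed": 2.0}
approved, score, reasons = explain_decision(weights, applicant, threshold=1.0)
print(approved, round(score, 2), reasons)
```

The output names the factors that counted against the applicant, which is the kind of answer someone can actually contest or act on.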

Accountability and Responsibility for AI Systems

As AI systems make more high-stakes decisions, our legal frameworks struggle to keep pace. When a self-driving car causes an accident, is the manufacturer liable? The software developer? The human passenger? We need new legal categories that recognize AI's unique characteristics - neither tools nor agents, but something in between.

Some jurisdictions are experimenting with AI liability passports that document decision-making processes for later review. Others propose mandatory insurance pools for AI developers. These experiments, while imperfect, point toward the hybrid solutions we'll need to govern autonomous systems.

The Impact of AI on Employment and the Workforce

The automation debate often focuses on job losses, but the deeper issue is power distribution. When AI augments workers, it can create better jobs; when it replaces them, it concentrates wealth. Scandinavian countries show another path: their tripartite systems bring unions, employers and government together to manage technological transitions. This collaborative approach has helped Denmark maintain high employment despite aggressive automation.

Looking ahead, we may need more radical solutions like lifelong learning accounts or reduced work weeks. The goal shouldn't be preserving obsolete jobs, but ensuring the benefits of automation are widely shared. After all, the point of technological progress isn't just efficiency - it's human flourishing.